DALL-E Prompts: Top Text-to-Image Generation in 2023

DALL-E is a text-to-image model developed by OpenAI that generates realistic and creative images from natural language descriptions, known as DALL-E prompts.

Trained on an extensive dataset of text and images, DALL-E uses deep learning to produce visuals that are both imaginative and lifelike. With a broad range of applications, including art generation, product design, architecture, and visual storytelling, DALL-E has the potential to reshape the way we create and consume visual media.

What is DALL-E?

The name DALL-E is a portmanteau of Dalí and WALL-E, a nod to the two ideas behind the technology: art and AI. The first part evokes the renowned Spanish surrealist painter Salvador Dalí, while the second refers to WALL-E, the robot in the Pixar (Disney) film of the same name.

The original DALL-E, created by OpenAI, was a transformer that generated images token by token, while DALL-E 2 and later versions use a diffusion process to generate images from textual prompts. Through training on a vast corpus of text and image data, DALL-E learns to associate textual input with visual output, resulting in highly detailed and coherent images.

TOP 4 Things You Can Do with DALL-E

1. Art Generation

One of the remarkable applications of DALL-E is its ability to generate new and creative works of art. By providing a textual description, users can prompt DALL-E to produce images of paintings, sculptures, and drawings in various styles. This feature enables artists to explore new artistic possibilities and expand their creative horizons.

2. Product Design

DALL-E can also be utilized for product design, enabling users to conceptualize and visualize new products and concepts. Whether it’s generating images of furniture, clothing, or cars, DALL-E empowers designers to explore different ideas and iterate designs efficiently.

3. Architecture

With its capacity to generate detailed images, DALL-E is a valuable tool for architects and designers. Given textual prompts, DALL-E can generate images of houses, office buildings, and bridges, aiding in the visualization and creation of new structures.

4. Visual Storytelling

DALL-E’s text-to-image generation capabilities allow for the creation of engaging visual stories. Given textual prompts that describe characters, settings, and scenes, DALL-E can generate accompanying images that enhance the storytelling experience. Writers, game developers, and filmmakers can use this feature to enrich their narratives.

Latest versions of DALL-E: DALL-E 2 and DALL-E 3

DALL-E 2 and DALL-E 3 are the latest advancements in artificial intelligence (AI) technology developed by OpenAI, a research organization founded by Elon Musk, Sam Altman, Greg Brockman, and others. These AI models are capable of creating amazing and incredibly detailed images from text descriptions.

DALL-E 2

DALL-E 2 is the second version of DALL-E and is more advanced than the first. The original DALL-E used a 12-billion-parameter version of the GPT-3 (Generative Pre-trained Transformer 3) architecture to create images from textual descriptions, whereas DALL-E 2 pairs CLIP text-image embeddings with a diffusion decoder. It can create more complex images and understand more abstract concepts. For example, if you write “a snail made of fire,” DALL-E 2 will create an image of a snail engulfed in flames.

DALL-E 3

DALL-E 3 is another improvement over its predecessors, and it can create even more detailed and complex images that follow the prompt more closely. Although its output is always a 2D image, it can depict objects and environments in a convincing 3D-rendered style. For example, if you write “a 3D-printed house on top of a mountain,” DALL-E 3 will generate an image of exactly that.

Prompt: Produce a meticulously detailed 3D model of the Arsenal Football Club (AFC) logo with a resolution of 4K or higher in the form of a clock

These AI models have the potential to revolutionize many industries that rely on images, such as advertising and product design. They could also be used in the film industry to create realistic and detailed special effects.

However, there are also concerns about the ethical implications of these technologies. Some worry that they could be used to create misleading or harmful images, such as fake news or propaganda. Others worry that they could be used to replace human creativity and artistic expression.

Overall, DALL-E, DALL-E 2, and DALL-E 3 are incredible technological advancements that demonstrate the power and potential of AI. While their impact is yet to be fully realized, it is clear that they will play an important role in shaping the future of technology and art.

Who Can Benefit from DALL-E?

DALL-E finds utility across various domains and industries, catering to the needs of professionals and enthusiasts alike. The following groups can particularly benefit from leveraging the power of DALL-E:

- Artists and designers seeking new avenues for creative expression.

- Product designers aiming to visualize concepts and prototypes efficiently.

- Architects and urban planners in need of visualizing architectural designs and structures.

- Writers, game developers, and filmmakers looking to enhance their storytelling with immersive visuals.

Advice for Using DALL-E

To make the most of DALL-E’s capabilities and enhance your experience, consider the following advice:

- Experiment: Explore different textual prompts and artistic styles to tap into DALL-E’s versatility.

- Iterate and Refine: Use the generated images as a starting point, refining and iterating to achieve the desired outcome.

- Combine with Traditional Methods: Consider integrating DALL-E outputs with traditional creative techniques to create unique and compelling visuals.

- Provide Detailed Prompts: Be explicit in your textual descriptions to ensure DALL-E understands your intended image generation accurately.

- Utilize Beta Features: Stay up-to-date with new features and functionalities introduced in the beta versions of DALL-E.

How to create DALL-E prompts

Creating DALL-E prompts is a fascinating and exciting process that requires careful consideration of various factors. To create DALL-E prompts, you need to follow the steps outlined below:

Step 1: Think of an Idea

The first step in creating DALL-E prompts is coming up with an idea. This idea could be anything, ranging from an object to an animal or even a scene. You need to be as specific as possible when thinking of an idea to ensure that the prompt generates an image that accurately reflects your concept.

Step 2: Write a Detailed Description

Once you have thought of an idea, the next step is to write a detailed description of what you want DALL-E to generate. Your description should include as many details as possible, such as colors, textures, shapes, and other relevant features.

For instance, if you want DALL-E to generate an image of a red sports car, your description should include details such as the car’s make and model, its body style, and any other unique features that make it stand out.

Prompt: Vibrant Scarlet Lamborghini on the highway, 3D, 4K resolution
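One way to keep descriptions detailed and consistent is to assemble them from named parts. The following is a minimal sketch, not an official DALL-E tool: `PromptSpec` and `build_prompt` are hypothetical names introduced here for illustration, and the comma-separated layout simply mirrors the example prompt above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PromptSpec:
    """Hypothetical container for the parts of an image description."""
    subject: str
    details: List[str] = field(default_factory=list)  # colors, textures, shapes
    setting: str = ""                                 # where the subject is
    style: List[str] = field(default_factory=list)    # rendering hints


def build_prompt(spec: PromptSpec) -> str:
    """Join the non-empty parts into one comma-separated prompt string."""
    subject = " ".join(spec.details + [spec.subject])
    if spec.setting:
        subject = f"{subject} {spec.setting}"
    parts = [subject] + spec.style
    return ", ".join(p.strip() for p in parts if p.strip())


spec = PromptSpec(
    subject="Lamborghini",
    details=["vibrant", "scarlet"],
    setting="on the highway",
    style=["3D", "4K resolution"],
)
print(build_prompt(spec))
```

Keeping the subject, details, setting, and style separate makes it easy to change one element at a time while leaving the rest of the description untouched.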

Step 3: Use Simple Language

When writing your description, it is essential to use simple language that DALL-E can easily understand. Avoid using technical terms unless they are necessary for describing your concept accurately.

Additionally, try to keep your sentences short and concise to make it easier for DALL-E to parse your description quickly.

Step 4: Experiment with Different Prompts

One of the best ways to create effective DALL-E prompts is to experiment with different options. Try writing various descriptions and see how DALL-E interprets them. This way, you can refine your descriptions and improve your prompts over time.
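Experimentation is easier when the variations are generated systematically. The sketch below assumes you want to try one subject across several styles and lighting conditions; `prompt_variants` and the example values are hypothetical and only illustrate the idea.

```python
from itertools import product


def prompt_variants(subject, styles, lightings):
    """Return one prompt per (style, lighting) combination."""
    return [
        f"{subject}, {style}, {lighting}"
        for style, lighting in product(styles, lightings)
    ]


variants = prompt_variants(
    "a red sports car on a coastal road",
    styles=["oil painting", "studio photograph"],
    lightings=["golden hour", "overcast"],
)
for variant in variants:
    print(variant)
```

Submitting each variant and comparing the results side by side shows which wording changes actually affect the generated image.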

Step 5: Evaluate Your Results

After generating an image from your prompt, it is essential to evaluate the results carefully. Analyze how well the image aligns with your original concept and identify areas where you can make improvements.

If the generated image does not accurately reflect your idea, consider refining your description or experimenting with different prompts until you get the desired result.

In summary, creating DALL-E prompts requires careful consideration of various factors, including your ideas, descriptions, language, experimentation, and evaluation. By following these steps, you can create effective DALL-E prompts that generate accurate and exciting images.

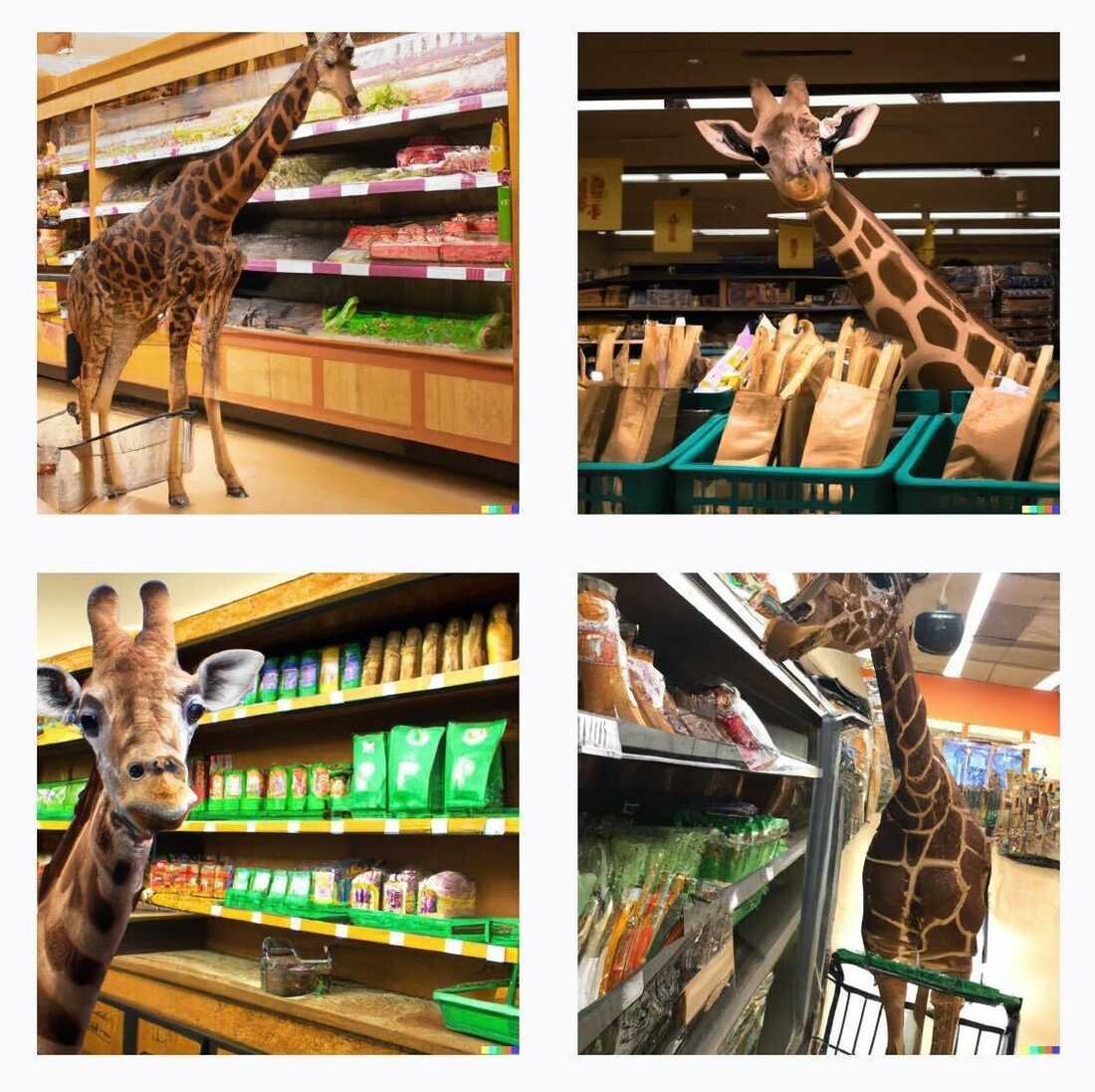

Examples of DALL-E

To illustrate the potential of DALL-E, here are a few examples of how it can generate various visuals based on textual prompts:

- A painting of a golden sunset over a tranquil ocean with vibrant hues.

- An avant-garde sculpture of intertwining metal rods that defy gravity.

- A futuristic office building made entirely of glass and steel, reflecting the cityscape.

- A whimsical character with colorful feathers, a playful smile, and eyes that sparkle with mischief.

DALL-E, MidJourney, and Stable Diffusion

Here is a comparison of DALL-E, MidJourney, and Stable Diffusion:

| Aspect | DALL-E | MidJourney | Stable Diffusion |
|---|---|---|---|
| Developed By | OpenAI | Midjourney, Inc. (San Francisco-based independent research lab) | Stability AI, CompVis, and Runway, supported by EleutherAI and LAION |
| Initial Release | January 5, 2021 | July 2022 (open beta) | August 22, 2022 |
| Technology | Original DALL-E: transformer language model similar to GPT-3 that accepts text and images as a single data stream of up to 1280 tokens; DALL-E 2 and 3 use diffusion | Generative AI and interactive machine learning that generate images from text descriptions, with a focus on painterly aesthetics | Latent diffusion model that generates images from text and image prompts by running the diffusion process in a latent space |
| Image Generation | Generates digital images from natural language descriptions, called “prompts” | Generates images based on natural language descriptions, called “prompts” | Produces unique photorealistic images from text and image prompts, also capable of creating videos and animations |
| Public Accessibility | Paid through OpenAI; DALL-E 3 is free through Bing (as of October 2023) | Paid | Open source; code and model weights are publicly released and can run on consumer hardware with a modest GPU |
| Unique Features | Original DALL-E was trained to produce each token one at a time using maximum likelihood | Focus on creating art with painterly aesthetics | Photorealistic image generation, open-source nature, capability to create videos and animations |

DALL-E, MidJourney, and Stable Diffusion are all AI models capable of generating images from text descriptions, also known as “prompts”.

While DALL-E and MidJourney are similar in their basic functionality, Stable Diffusion differentiates itself with its open-source nature and the ability to create photorealistic images, videos, and animations. Each of these models showcases the advancing frontier of generative AI in the domain of image generation from textual input.

DALL-E 2’s created images

MidJourney’s image sample

Stable Diffusion’s created images

5 FAQs about DALL-E

1. Is DALL-E available for free?

Partly. OpenAI charges for DALL-E through its own products and API, but DALL-E 3 is available for free through Microsoft’s Bing (see question 5).

2. Does DALL-E have an API?

Yes. OpenAI offers DALL-E through its image generation API. The API launched in public beta in November 2022, and OpenAI has expanded the supported models since then.
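As a rough illustration of what a call to the API looks like, the sketch below prepares a request for OpenAI’s image generation endpoint using only the Python standard library. It is a sketch under stated assumptions, not an official client: the helper names `build_image_request` and `generate_image_url` are hypothetical, and the model name, image size, and endpoint reflect OpenAI’s documentation at the time of writing and may change.

```python
import json
import os
import urllib.request

# Image generation endpoint at the time of writing (may change).
API_URL = "https://api.openai.com/v1/images/generations"


def build_image_request(prompt, api_key, model="dall-e-3", size="1024x1024"):
    """Prepare (but do not send) a POST request for one generated image."""
    payload = {"model": model, "prompt": prompt, "n": 1, "size": size}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def generate_image_url(prompt):
    """Send the request and return the URL of the generated image.

    Requires network access and an OPENAI_API_KEY environment variable;
    each successful call is billed to the account that owns the key.
    """
    request = build_image_request(prompt, os.environ["OPENAI_API_KEY"])
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["data"][0]["url"]
```

With a valid key set, `generate_image_url("a snail made of fire")` would return a link to the generated image. Separating request construction from sending makes it possible to inspect the payload without spending credits.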

3. What is the pricing structure for DALL-E?

OpenAI publishes DALL-E pricing on its website. API usage is billed per generated image, and the rate depends on the model and the image resolution.

4. Where can I find the DALL-E paper?

The DALL-E paper, “Zero-Shot Text-to-Image Generation” (Ramesh et al.), was posted to arXiv in February 2021, shortly after OpenAI announced the model in January 2021. It provides an in-depth description of the architecture, training process, and results of the DALL-E model.

5. Is DALL-E available in Bing?

Yes. In October 2023, Microsoft announced that DALL-E 3 is available to everyone for free in Bing Chat and at Bing.com/create.

Conclusion

DALL-E represents a significant leap forward in text-to-image generation, offering a groundbreaking approach to transforming natural language into visually captivating images. With its potential applications in art, product design, architecture, and visual storytelling, DALL-E opens up new possibilities for creative expression and innovation.

Although OpenAI charges for direct access, the free availability of DALL-E 3 through Bing and the continued advancements planned by OpenAI make DALL-E an exciting technology to watch as it continues to redefine the boundaries of visual media.